
Highway to HBLL: The missing link between DRAM and L3 found

Chipzilla gets in on Last Level Cache design

A new cache is needed between main memory and the tri-level processor cache structure in servers to avoid CPU core wait states.

That's the point of a Last Level Cache chip designed by Piecemakers Technology with help from Taiwan's Industrial Technology Research Institute (ITRI) and Intel.

As data travels from slow storage to a fast processor, it passes through layers of intermediate stores and caches. Each layer needs enough capacity and IO speed to keep the layer above it busy. Any shortfall in capacity or access speed leaves the next layer up waiting for data, which wastes CPU cycles and slows applications.

Piecemakers staffer Tah-Kang Joseph Ting, presenting at the International Solid State Circuits Conference (ISSCC) earlier this month, said DRAM latency had been stuck at 30ns for some time even as successive DDR generations increased its sequential bandwidth. That leaves, he says, a (relatively) large latency gap between DRAM and the next layer up in the memory hierarchy: Level 3 cache.

Jim Handy of Objective Analysis writes about this: "Furthermore, there's a much larger latency gap between the processor's internal Level 3 cache and the system DRAM than there is between any adjacent cache levels."

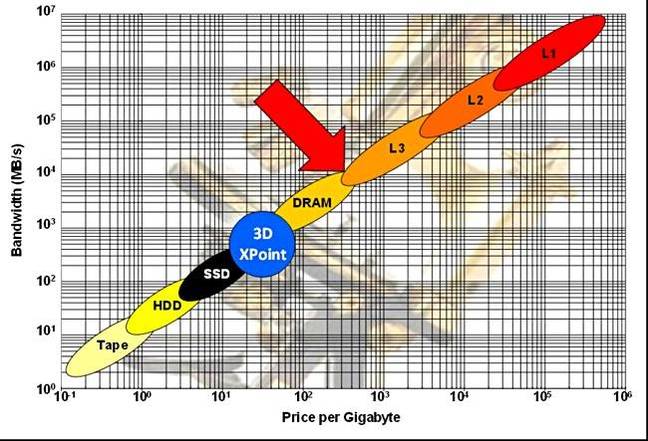

Handy has illustrated where the Piecemakers chip fits in the memory hierarchy with a chart that plots storage and memory technologies by bandwidth against price per GB.

Red arrow indicates DRAM-L3 cache gap

We have seen AnandTech data putting the L3 cache latency of a Xeon E5-2690 at 15-20ns.
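To put those nanosecond figures in processor terms, here is a back-of-envelope conversion into clock cycles. The 3 GHz clock is a hypothetical round number, not the E5-2690's actual clock speed, and the sketch assumes the core does nothing useful while it waits, which overstates the cost on an out-of-order core.

```python
# Convert access latency (ns) into core clock cycles spent waiting.
# Assumes a hypothetical 3 GHz core (3 cycles per ns) that stalls
# completely during the access; real out-of-order cores hide some of this.

CLOCK_GHZ = 3.0  # hypothetical clock speed, chosen for round numbers


def stall_cycles(latency_ns: float, clock_ghz: float = CLOCK_GHZ) -> float:
    """Return the number of clock cycles that elapse during one access."""
    return latency_ns * clock_ghz


if __name__ == "__main__":
    for name, latency_ns in [
        ("L3 cache, best case", 15.0),
        ("L3 cache, worst case", 20.0),
        ("DRAM", 30.0),
    ]:
        print(f"{name}: {latency_ns:.0f}ns = {stall_cycles(latency_ns):.0f} cycles")
```

On those assumptions the 10-15ns gap between L3 and DRAM costs roughly 30-45 extra cycles on every access that has to go all the way out to memory.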

Piecemakers' High-Bandwidth Low-Latency (HBLL) DRAM chip has a 17ns latency, achieved partly through interleaved RAM banks (8 x 72-bit channels, each accessing 32 banks) and partly by using an SRAM rather than a DRAM interface.
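Interleaving helps because consecutive accesses land on different banks, so they can proceed in parallel instead of queuing behind one another. The sketch below shows one common low-order interleaving scheme over 8 channels x 32 banks. Only the channel and bank counts come from the HBLL description; the 64-byte block size and the address-to-bank mapping are hypothetical, not Piecemakers' actual design.

```python
# Low-order address interleaving across 8 channels x 32 banks.
# Channel/bank counts are from the HBLL description; the 64-byte block
# size and this particular mapping are hypothetical illustrations.

from typing import NamedTuple

CHANNELS = 8
BANKS_PER_CHANNEL = 32
BLOCK_BYTES = 64  # hypothetical transfer size per access


class Location(NamedTuple):
    channel: int
    bank: int
    slot: int  # which block within that (channel, bank) pair


def map_address(addr: int) -> Location:
    """Spread consecutive blocks across channels first, then across banks."""
    block = addr // BLOCK_BYTES
    channel = block % CHANNELS
    bank = (block // CHANNELS) % BANKS_PER_CHANNEL
    slot = block // (CHANNELS * BANKS_PER_CHANNEL)
    return Location(channel, bank, slot)


if __name__ == "__main__":
    # 256 consecutive blocks hit 256 distinct (channel, bank) pairs,
    # so none of them has to wait for a busy bank.
    total = CHANNELS * BANKS_PER_CHANNEL
    pairs = {map_address(i * BLOCK_BYTES)[:2] for i in range(total)}
    print(len(pairs))
```

A sequential stream only returns to the first bank after 256 blocks, giving each bank time to recover before it is needed again.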

So we envisage DRAM feeding data to this HBLL cache, which then fills the L3 cache, which then fills the L2 cache, which... and so on to the actual CPU, which can now work harder.
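Whether an extra tier pays off depends on how many L3 misses it catches. A standard way to reason about that is average memory access time (AMAT), weighting each level's latency by the share of accesses it serves. In the sketch below, the 20ns L3, 17ns HBLL and 30ns DRAM figures come from this article. Every hit rate and the L1/L2 latencies are hypothetical, and all figures are treated as the total latency when data comes from that level, which simplifies things (17ns is a chip-level figure).

```python
# Average memory access time (AMAT) with and without an extra cache tier.
# Hit rates and the L1/L2 latencies are hypothetical; the 20ns L3, 17ns
# HBLL and 30ns DRAM figures are treated as total load-to-use latencies.

from typing import Sequence, Tuple

Level = Tuple[float, float]  # (latency in ns, local hit rate 0..1)


def amat(levels: Sequence[Level], memory_ns: float) -> float:
    """Weight each level's latency by the fraction of accesses it serves."""
    total = 0.0
    reach = 1.0  # fraction of accesses that get as far as this level
    for latency_ns, hit_rate in levels:
        total += reach * hit_rate * latency_ns
        reach *= 1.0 - hit_rate
    return total + reach * memory_ns  # everything left over goes to DRAM


CPU_CACHES = [(1.0, 0.9), (4.0, 0.6), (20.0, 0.5)]  # L1, L2, L3
HBLL = (17.0, 0.5)  # hypothetical 50% hit rate for the new tier
DRAM_NS = 30.0

if __name__ == "__main__":
    print(f"without HBLL: {amat(CPU_CACHES, DRAM_NS):.2f}ns")
    print(f"with HBLL:    {amat(CPU_CACHES + [HBLL], DRAM_NS):.2f}ns")
```

With these made-up numbers the model gives 2.14ns without the extra tier and 2.01ns with it. Each access the HBLL tier serves saves 13ns over DRAM, so the overall gain scales with its hit rate and with how often workloads miss in L3.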

Intel provided funding for Piecemakers' work. Handy writes: "It appears that Intel is not only interested in filling the speed gap between DRAM and SSDs with 3D XPoint memory, but it also wants to fill the red-arrow gap with Piecemakers’ HBLL or something like it. Of course, we won’t know this for certain until Intel announces its plans." ®