
K2 promises faster data avalanche with NVMe drives

Kaminario claims you need scale-up and scale-out for full fabric access benefits

Interview NVMe adoption by array and storage software vendors is shaping up to be one of the biggest transitions in the storage industry as the long-lived Fibre Channel SAN becomes the NVMeF SAN.

How does non-NVMe SSD-using all-flash array vendor Kaminario view NVMe adoption? We asked CTO Shachar Fienblit how and why and when this might happen.

El Reg: Will simply moving from SAS/SATA SSDs to NVMe drives bottleneck existing array controllers?

Shachar Fienblit: In short, yes. Modern all-flash arrays must support advanced software features including deduplication, compression, snapshots, replication, etc. The processing footprint of these data services makes the controller CPU the main bottleneck in AFAs. NVMe drives will reduce the CPU footprint for the IO stack, freeing 15-20 per cent of controller CPU capacity to do other things.

So moving to NVMe alone will deliver small improvements in IOPS and latency, but the controller will still need to support advanced data services. Note that systems lacking advanced software features (critical to delivering overall cost efficiency and managing flash endurance) will demonstrate higher performance.
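To put the 15-20 per cent figure in perspective, here is a rough back-of-envelope sketch. It assumes (our assumption, not Kaminario's) that the controller is purely CPU-bound and that every freed cycle goes to serving IO. The function name `cpu_bound_speedup` is hypothetical and used for illustration only.

```python
def cpu_bound_speedup(freed_fraction: float) -> float:
    """Upper bound on the throughput gain of a CPU-bound controller.

    Assumption: per-IO CPU cost falls by freed_fraction and all of the
    freed cycles are spent on serving more IO.
    """
    if not 0.0 <= freed_fraction < 1.0:
        raise ValueError("freed_fraction must be in [0, 1)")
    return 1.0 / (1.0 - freed_fraction)


# The 15-20 per cent range quoted in the interview.
for freed in (0.15, 0.20):
    gain = cpu_bound_speedup(freed)
    print(f"{freed:.0%} of CPU freed -> at most {gain:.2f}x IOPS")
```

Under those assumptions the ceiling is roughly 1.18x to 1.25x, which fits the "small improvements" description. If the freed cycles are spent on data services such as deduplication instead, the IOPS gain would be smaller still.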

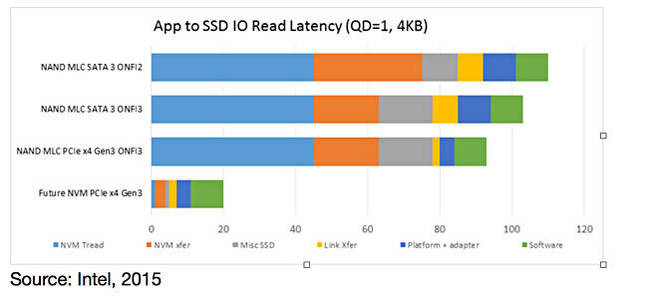

This graphic from Intel shows the latency cut of the NVMe stack versus the SCSI stack.

El Reg: Must we wait for next-generation controllers with much faster processing?

Shachar Fienblit: Next-gen AFAs will leverage new controller CPUs as well as standard offload engines. The bottleneck will continue to be the CPU processing to support advanced storage features like deduplication, compression, snapshots, and replication. New hardware technologies that offload processing, including compression-assist, encryption-offload and hash calculations, will be cost/performance optimising additions to the hardware stack. New processor technologies from Intel such as Skylake, and the competing ARM technologies, will increase overall controller performance.

El Reg: Will we need affordable dual-port NVMe drives so array controllers can provide HA?

Shachar Fienblit: Ideally, we will see SSD vendors building affordable, high-capacity, dual-port NVMe drives. These drives would use similar flash to consumer drives to drive down cost. Advanced AFA software architectures should be optimised to work with the low-endurance drives in order to avoid the unnecessary premium of high-endurance drives. If SSD vendors do not pursue this route, AFA vendors may turn toward using interposers to transition from dual port to single port (similar to SAS-SATA interposers used today).

El Reg: What does affordable mean?

Shachar Fienblit: Affordable means dual-port NVMe drives that leverage the same flash used by consumer drives. Today it is 3D TLC SSDs. In the future it will be 3D technology with more layers and even QLC (4 bits/cell or quad-level cell).

El Reg: Are customers ready to adapt NVMeF array-accessing servers with new HBAs and, for ROCE, DCB switches and dealing with end-to-end congestion management?

Shachar Fienblit: Adoption in networking protocols always takes longer than we think. We will see a transition period where customers will refresh their existing networking infrastructure. Despite the complexity of moving, there is significant value in the low-latency NVMeF connection which should accelerate the refresh cycle. As an intermediate solution, some customers will buy a storage array that supports NVMeF and will use soft ROCE to connect with hosts that do not support RDMA networks.

El Reg: Do they need routability with ROCE?

Shachar Fienblit: Not in the near future.

El Reg: Could we cache inside the existing array controllers to augment existing RAM buffers and so drive up array performance?

Shachar Fienblit: Yes, mainly metadata caching and caching for frequently used data in systems that support deduplication.

El Reg: With flash DIMMs, say? Or XPoint DIMMs in the future?

Shachar Fienblit: 3D XPoint is a preferable way to scale the caching layer since it will be a cost-efficient solution that enables dense storage arrays.

El Reg: Does having an NVMe over fabrics connection to an array which is not using NVMe drives make sense?

Shachar Fienblit: It makes sense to cut some of the latency but the value is much more limited without the accelerated performance of NVMe drives.

El Reg: When will NVMeF arrays filled with NVMe drives and offering enterprise data services be ready? What is necessary for them to be ready?

Shachar Fienblit: As discussed above, the real benefit of NVMeF and NVMe will come when deployed together, with faster controllers and enterprise data services to take full advantage of the new latency advantages. Taken separately, the benefits will be nominal latency improvements.

Beyond pure performance increases, the real benefit of these new technologies will come from highly scalable array architectures that can both scale up and scale out by leveraging an architecture designed to decouple capacity from compute. Building a complete SAN software stack with the complete data services needed to deliver cost efficiency is a long and challenging process. It will be a faster process for those vendors with software architectures that already support this decoupled model.

+Comment

Kaminario is developing a scale-up and scale-out NVMe flash array architecture using NVMe-accessed shelves. We might imagine that from around the second half of 2017 onwards we could be hearing more about these shelves and Kaminario's K2 array. ®