
Lies, damn lies and election polls: Why GE2015 pundits fluffed the numbers so badly

The lessons of shaping a mathematical 'reality'

Whatever you may think about the outcome of last Thursday’s General Election, there is one issue on which public, politicians and pundits alike seem to be broadly united: how badly the opinion pollsters fared. They got it very wrong!

Egregiously so, according to the editor of the Market Oracle, an online financial forecasting service. Nadeem Walayat wrote: “The Guardian and the rest of the mainstream press have effectively wasted tens of millions of £'s on worthless opinion polls.”

Is this fair? Whose fault is it that we have all spent the last five weeks absolutely convinced that the future was multi-party? And does it really matter?

Let’s start with what the polls were telling us. In that key pre-election month, no significant poll was forecasting anything other than a hung parliament. Precise figures varied from poll to poll, but the average was fairly consistent. No party was pulling ahead dramatically in percentage terms, so no party was on course for an overall majority.

Take the ICM poll of polls, reported in The Guardian on the morning of the election and based on a weighted average of all constituency-level polls, national surveys and polling in the regions. This put the Tories and Labour within a whisker of one another, around the 34 per cent mark.
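ICM's actual weighting scheme isn't described here, so what follows is only a minimal sketch of how a weighted poll of polls works in general, assuming each input poll carries a single numeric weight. The `Poll` class, the weights and the vote shares are all hypothetical, chosen for illustration rather than taken from any 2015 survey.

```python
from dataclasses import dataclass


@dataclass
class Poll:
    """One input poll. Labels, weights and shares here are hypothetical."""
    label: str
    weight: float             # hypothetical weight, e.g. reflecting sample size or recency
    shares: dict[str, float]  # party -> vote share, in per cent


def poll_of_polls(polls: list[Poll]) -> dict[str, float]:
    """Weighted average of vote shares; assumes every poll reports the same parties."""
    total_weight = sum(p.weight for p in polls)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    parties = polls[0].shares.keys()
    return {
        party: sum(p.weight * p.shares[party] for p in polls) / total_weight
        for party in parties
    }


if __name__ == "__main__":
    # Made-up inputs for illustration only; not real polling figures.
    polls = [
        Poll("national survey", 3.0, {"Con": 34.0, "Lab": 33.0}),
        Poll("regional polling", 1.0, {"Con": 30.0, "Lab": 37.0}),
    ]
    print(poll_of_polls(polls))  # {'Con': 33.0, 'Lab': 34.0}
```

Because the national survey carries three times the weight of the regional one, the combined figure sits much closer to its numbers; how those weights are set is exactly where different poll aggregators diverge.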

From that, The Guardian suggested a dead heat, with both parties ending up on 273 seats, the SNP capturing 52, and the Lib Dems hanging on to 27.

There were, in fact, two quite separate failures which have been elided into one by the popular press – and two separate explanations. The first relates to share of vote.

| Party | Predicted vote share (%) | Actual vote share (%) |
|---|---|---|
| Conservative | 34 | 36.9 |
| Labour | 33.5 | 30.5 |
| UKIP | 12.5 | 12.6 |
| Lib Dem | 9 | 7.8 |
| SNP | – | 4.7 |
| Green | – | 3.8 |
| Other | – | 3.7 |
| SNP, Green and Other combined | 11 | 12.2 |
| Total | 100.0 | 100.0 |

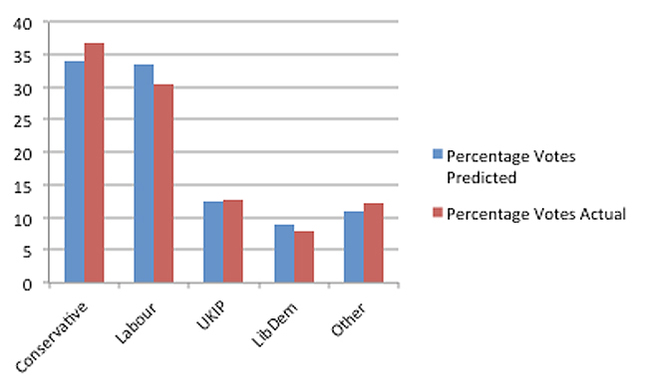

Table 1: The ICM Poll of Polls vote forecast (6 May 2015) vs. actual votes cast (7 May 2015)

The figures above and just below are far less wide of the mark than you might suppose. UKIP, Lib Dem and Other were all respectably close to the actual result. Neither the Lib Dems nor the Greens surprised us by taking 50 per cent of the vote on the day. If such a proposition sounds fanciful, it does at least help to define what a truly inaccurate forecast would look like.

ICM Poll of Polls vote forecast (6 May 2015) vs. actual votes cast (7 May 2015)

The real problem lies in the gap between the Tories and Labour: the poll of polls had them half a point apart, but on the day the Conservatives finished 6.4 percentage points ahead.
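The size of each miss can be checked directly from Table 1. This is a minimal Python sketch using only the four parties for which the table gives both a forecast and a result; the dictionary and function names are illustrative, not part of any polling methodology.

```python
# Vote shares in per cent, from Table 1 (ICM poll of polls, 6 May 2015,
# vs. actual votes cast, 7 May 2015). Only parties with both figures are included.
PREDICTED = {"Conservative": 34.0, "Labour": 33.5, "UKIP": 12.5, "Lib Dem": 9.0}
ACTUAL = {"Conservative": 36.9, "Labour": 30.5, "UKIP": 12.6, "Lib Dem": 7.8}


def share_errors(predicted, actual):
    """Actual minus predicted share for each party, in percentage points."""
    return {party: round(actual[party] - predicted[party], 1) for party in predicted}


def lead(shares, first="Conservative", second="Labour"):
    """Gap between two parties' shares, in percentage points."""
    return round(shares[first] - shares[second], 1)


if __name__ == "__main__":
    for party, error in share_errors(PREDICTED, ACTUAL).items():
        print(f"{party:<13}{error:+.1f}")
    forecast_lead = lead(PREDICTED)
    actual_lead = lead(ACTUAL)
    print(f"Forecast Con lead: {forecast_lead:.1f}")
    print(f"Actual Con lead:   {actual_lead:.1f}")
    print(f"Error in the lead: {actual_lead - forecast_lead:.1f}")
```

UKIP and the Lib Dems come out within about a point, while the Conservatives were underestimated by 2.9 points and Labour overestimated by 3.0. Because those two errors run in opposite directions, they compound: a forecast lead of 0.5 points against an actual lead of 6.4 is a miss of 5.9 points on the one number that decided who governs.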