
CERN IT boss: What we do is not really that special

You'll all be doing the same - in about 10 years' time

When the head of infrastructure services at CERN tells you that he has come to the conclusion that there’s nothing intrinsically “special” about the systems at the multi-billion atom-smasher, you naturally want to check you’ve heard correctly.

After all, when we sat down with Tim Bell at the OpenStack Summit in Paris recently, it was rather noisy as around 5,000 visibly excited techies swapped war stories about the open cloud computing platform while vendors hurled hospitality and job offers at them.

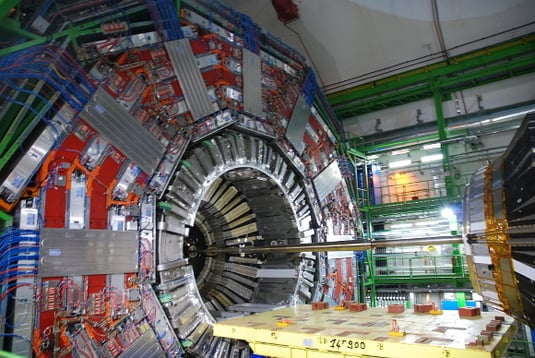

Few of them, however, would be running the sort of systems for which Bell and his team are responsible: a 100PB archive growing at 27PB a year, with 11,000 servers supporting 75,000 disk drives and 45,000 tapes. And that data is being thrown off by the machine that recently found the Higgs Boson, the so-called God particle. Most tech managers would say that’s at the upper end of data infrastructure challenges.

But as Bell continued, some of his issues certainly sounded familiar. The Large Hadron Collider itself is in the midst of an upgrade which will double the amount of energy produced, and the supporting systems at CERN have to keep up.

When your IT system has to keep up with the Large Hadron Collider

The LHC is due to fire up again in April, and Bell and his team have been retooling the IT infrastructure since 2013. As of the beginning of this month, CERN is running four OpenStack-based clouds, the largest consisting of 70,000 cores running across 3,000 servers, while three other instances clock up a further 45,000 cores. The total number of cores should hit 150,000 in the first quarter of next year. Just in time for the reboot of the LHC.

But two years ago, when Bell and his team started planning for the upgrade, it was time to do some hard thinking - a not uncommon practice at CERN you’d assume. Even the world of top-end physics has to operate within human laws such as economics – to some degree anyway. And, according to Bell, this means no more staff, a decreasing materials budget, and legacy tools that are “high maintenance and brittle”. And just in case you were wondering, the “users” expect fast self-service.

“The big thing in this case was to apply that to the IT department ... we were basically challenging some fundamental assumptions that CERN has to create its own solutions. That they’re special.”

He continued, “When you go further you need to start challenging those assumptions that led software to be developed locally at CERN rather than taking open source and contributing to it.”

So, that double-take again: CERN IT is not special? Really?

Thinking differently

“There are clearly some special parts,” says Bell. “But there are also often things that are of interest to other people. The key thing to avoid is where we end up doing something that is similar to what is being done outside.”

And what crystallised that realisation? “We had a moment where we worked out just how much computing resources we were going to have to give to the physicists next year.”

“It was a point where we were confronted by a problem that was [so] difficult we had to step back. It wasn’t going to be solved by doing a little bit extra - we had to basically rethink things from the beginning ... and I think that helped to set a few ideas in place.” Sound familiar?

It’s not as if Bell’s team were completely starved of resources. The IT on-site at CERN has been supplemented by a new data centre in Hungary. Even so, Bell continues, “What we needed to appreciate was the extent to which the organisation needed to change as well as it just being a matter of installing some more servers.”

Hence the decision to get up close and personal with OpenStack in general and Rackspace in particular. It might be worth noting that, back when we wrote this, the firehose Bell’s team was drinking from was pumping out a mere 25PB a year.

“After a few months of prototyping then we had the basis to set in place something where we could map out the roadmap to retire the legacy and the legacy environment. The decommissioning of it started on the 1st of November,” Bell says. “So in 18 months we basically produced a tool chain [which is] replacing the legacy environment that we’d run for the previous 10 years.”

OK, so that still sounds a bit special. But like every organisation, Bell’s has hit a few bumps as experienced hands went cold turkey on the “not invented here” approach.

“That’s involved a lot of work with the people looking after the services and helping them with some training - either formal or informal - in order to use the new tools. But so far we’ve had a lot of positive feedback of the new tools, so that’s all helped to get people on board.”

And the formal training has often come from youngsters straight out of college, who are familiar with the new tools and new ways of doing things. While this is perhaps an inversion of the traditional way IT is run, it is also increasingly common. Or at least commonly talked about. And it’s also faster, at least in theory, than having a constant tide of recruits get familiar with tools they will only find in one organisation.

“Many times people are joining CERN with the knowledge of the tools from university,” says Bell. “So it means that the training time is considerably less - you can buy a book that will tell you about Puppet whereas in the past you would have had to sit down with the guru to understand how the old system worked.”

No one’s suggesting that disgruntled older hands are getting the hump and leaving. But Bell says CERN has always been structured “to assume a regular turnover” of staff, from summer interns to fellows programmes.

“As part of CERN’s mission, it’s not only the physics. There is a clear goal for CERN to act also as a goal for people to arrive, spend a short period of time at CERN - up to five years on short term contract - and then return to their home countries with those additional skills. That could be engineering, [equally] it could be physics and computing.”

“Now in this case what’s great is that we take a Linux expert out of university and we produce someone that’s trained in OpenStack and Puppet and they find themselves in a lot of demand at such time as they have a contract at CERN finish.”