
EMC: I Have A Dream - of ABBA in every HPC setup

When you need to nuke an asteroid, take a chance on us

EMC has had a dream, a flash appliance called ABBA that helps mega-node HPC set-ups run faster and smoother.

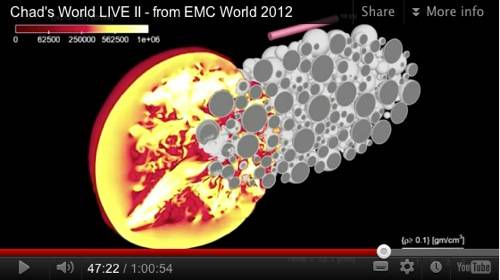

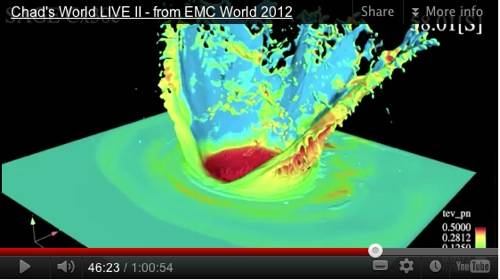

ABBA is an acronym for the Active Burst Buffer Appliance. It's designed by Los Alamos National Laboratory (LANL) and EMC to help in massive, tightly coupled high-performance computing (HPC), where nodes need to interact and a job should not have to restart from scratch if one or more nodes fail. Apparently (I'm no HPC expert), HPC jobs involving half a million compute cores, such as a LANL one simulating a nuclear weapon strike on an asteroid, have a series of checkpoints set up in their code, with the entire memory state written to storage nodes at each checkpoint.

LANL checkpoints every four hours today onto a storage subsystem with roughly 30,000 spindles. If a node fails, the job can be restarted from the preceding checkpoint using the data saved on the storage nodes, instead of going back to the beginning. But the compute nodes have to stop calculating while they write to the storage nodes, so as job run times lengthen and/or the number of nodes grows, the amount of wasted app compute time increases.
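To make the restart-from-checkpoint idea concrete, here is a toy Python sketch, not LANL's code. It assumes a job is a loop of numbered steps whose whole "memory state" fits in one integer, that it checkpoints every `interval` steps, and that nodes fail at hypothetical step numbers passed in `fail_at`. The names `run_job`, `interval` and `fail_at` are illustrative only.

```python
def run_job(total_steps: int, interval: int, fail_at: set[int]) -> tuple[int, int]:
    """Toy checkpoint/restart loop (hypothetical model, not LANL code).

    Returns (final_state, steps_executed), where steps_executed counts
    every step run, including steps redone after a failure.
    """
    state, step = 0, 0
    saved = (0, 0)            # (step, state) stored at the last checkpoint
    pending = set(fail_at)    # each simulated failure fires only once
    executed = 0
    while step < total_steps:
        if step in pending:
            pending.discard(step)
            # Node failure: roll back to the preceding checkpoint, not to step 0.
            step, state = saved
            continue
        state += step         # stand-in for one step of real computation
        step += 1
        executed += 1
        if step % interval == 0:
            # Compute would pause here while the whole state is written out.
            saved = (step, state)
    return state, executed


print(run_job(100, 10, {35}))    # -> (4950, 105)
print(run_job(100, 1000, {35}))  # -> (4950, 135)
```

Both runs reach the same answer, but with a checkpoint every 10 steps the failure at step 35 only forces steps 30 to 34 to be redone, whereas with no checkpoint before the failure all 35 completed steps are thrown away and the job starts again from step 0.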

With half a million compute cores, it takes time to transfer masses of data, petabytes of the stuff, to the storage nodes and to restore it when nodes fail. Wouldn't it be a good thing if this could be done using flash-based storage nodes instead of disk drive-based ones? That would speed things up by getting rid of disk drive latency, and would also steer the set-up away from disk failures.

The flash storage nodes could connect to the compute nodes across higher-speed links than the HDD-based nodes, making things faster still. With two flash appliances per compute node, one could receive the latest checkpoint data while the second writes the previous checkpoint data to disk. That would allow more checkpoints, further reduce the delay caused by a job restart, and keep the compute nodes operating more continuously.
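The two-appliance arrangement is a classic ping-pong (double) buffer. The Python sketch below illustrates the idea under stated assumptions and is not EMC's design: each hypothetical "appliance" is an in-memory dict, "disk" is a list, and a single background thread stands in for the slower drain to disk. A new checkpoint only has to wait if the buffer it needs is still draining the checkpoint from two rounds earlier.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Optional


def checkpoint_pipeline(snapshots: list[bytes]) -> list[bytes]:
    """Ping-pong two flash buffers: one takes the new checkpoint while the other drains.

    Hypothetical model: each "appliance" is a dict and "disk" is a list.
    """
    appliances: list[dict] = [{"data": None}, {"data": None}]
    drains: list[Optional[Future]] = [None, None]  # in-flight drain per appliance
    disk: list[bytes] = []

    def drain(idx: int) -> None:
        # Slow path: copy the buffered checkpoint to disk, then free the buffer.
        disk.append(appliances[idx]["data"])
        appliances[idx]["data"] = None

    # One worker thread stands in for the disk tier; it runs drains in FIFO order.
    with ThreadPoolExecutor(max_workers=1) as pool:
        for k, snapshot in enumerate(snapshots):
            idx = k % 2  # alternate between the two appliances
            if drains[idx] is not None:
                # Block only if this buffer still holds the checkpoint from two rounds ago.
                drains[idx].result()
            appliances[idx]["data"] = snapshot  # fast flash write; compute could resume here
            drains[idx] = pool.submit(drain, idx)
    # Exiting the with-block waits for every outstanding drain to finish.
    return disk


print(checkpoint_pipeline([b"ckpt0", b"ckpt1", b"ckpt2"]))  # -> [b'ckpt0', b'ckpt1', b'ckpt2']
```

Using a single drain worker keeps checkpoints reaching "disk" in the order they were taken; a real system would also need to track which on-disk checkpoint is complete before relying on it for a restart.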

If the HPC set-up has IO nodes interconnecting the compute and storage nodes, then the flash-based storage nodes could double as the IO nodes, simplifying the overall design.

ABBA is this flash storage node, and it is intended for fast big data situations like LANL's. If HPC continues its massively parallel growth, then theoretically we are heading towards a billion cores and exaflop computing. EMC scientists Sorin Faibish and John Bent, of the Fast Data Group in EMC's Office of the CTO, are working on ABBA with others. They have produced a detailed slide deck (pdf).

In it they make the point that ABBA is file system-agnostic, and suggest that using ABBA could improve compute efficiency by 40 percent, where compute efficiency is the app compute time divided by the sum of the app compute time and the checkpoint time.
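The efficiency metric is simple enough to write down directly. This Python sketch just encodes that ratio; the function name is illustrative, and the example checkpoint times are hypothetical (only the four-hour compute interval comes from LANL's current schedule), so they say nothing about the 40 percent figure.

```python
def compute_efficiency(app_compute_time: float, checkpoint_time: float) -> float:
    """App compute time / (app compute time + checkpoint time)."""
    if app_compute_time < 0 or checkpoint_time < 0:
        raise ValueError("times must be non-negative")
    total = app_compute_time + checkpoint_time
    if total == 0:
        raise ValueError("at least one time must be positive")
    return app_compute_time / total


# Hypothetical figures: 4 hours of compute per checkpoint interval.
print(compute_efficiency(4.0, 1.0))   # 1-hour checkpoint -> 0.8
print(compute_efficiency(4.0, 0.25))  # 15-minute checkpoint
```

Cutting the hypothetical checkpoint from an hour to 15 minutes lifts efficiency from 0.8 to about 0.94, which is the lever faster flash checkpointing is meant to pull.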

ABBA nodes could also provide co-processing for analysis and visualisation of the HPC jobs. Virtual Geek has more information, including some videos:

- Chad's World LIVE II from EMC World 2012. Fast forward to 44:30 to get to the ABBA part. This is a really cool video. Who is the dancing queen?

- Simplifying HPC Architectures.

- Demystifying Fast Data which looks at the Los Alamos challenge.

Virtual Geek also mentions a Big Ideas video.

Ah, EMC and flash; the winner takes it all. ®