This article is more than 1 year old

Pure Storage is betting its FlashArray farm on NVMe

Adoption starts in 2017 and will be leading flash interface protocol by 2019

+Comment At a high level, Pure believes NVMe is poised to unlock the next generation of performance and density gains, and any modern all-flash array needs to be ready to take advantage. It plans to enable NVMe with tier 1 resiliency and enterprise data services for everyone, refusing to see it as expensive, exotic, high-performance niche technology.

NVMe, says Pure, brings massive parallelism to unlock the serial bottleneck of SAS/SATA interfaces, and will likely become the leading interface protocol for flash by 2019.

El Reg: Will simply moving from SAS/SATA SSDs to NVMe drives bottleneck existing array controllers?

Pure Storage: Yes, and that's the right thing to do. Ideally, a shared storage system is limited at the front-end (controller CPUs), because that characteristic provides the best latency up until the throughput is saturated. (There is no value in having a fast storage system with internal back-end queues sitting full.) Assuming back-end bottlenecks are removed, storage systems that are architected for non-disruptive upgrades to next-generation controllers will be able to deliver continual performance increases with annual compute advances in line with Moore’s Law.

Along with large capacity NVMe SSDs, this means that a system can be performant and very dense. But it doesn't mean that adding more SSDs is a problem – adding more NVMe SSDs means that the system scales up as well as down – with consistently low latency. In fact, a large system will have better read tail latency, which is key to many apps, particularly distributed apps.

El Reg: Must we wait for next-generation controllers with much faster processing?

Pure Storage: No, we do not need to wait for next-generation CPUs, as long as NVMe connectivity is supported on the controller. NVMe is more efficient than SAS/SATA, so systems will get a nice boost from simply replacing the drives. The result can be faster total performance (or reduced cost by getting the same performance from smaller CPUs), increased performance density (so you get the maximum performance even on the smallest configuration), increased footprint density (with bigger NVMe drives), and greater consolidation – all with consistently low latency. Results will vary depending on the array architecture, especially on the software front.

El Reg: Will we need affordable dual-port NVMe drives so array controllers can provide HA?

Pure Storage: Yes. Affordable dual-ported NVMe drives are needed for NVMe to be adopted broadly in the enterprise, and we believe that such drives will be available sooner than most think.

El Reg: What does affordable mean?

Pure Storage: We think affordability means being able to replace your entire Tier 1 Hybrid storage footprint with a similar or better TCO using NVMe all-flash.

El Reg: Are customers ready to adapt NVMeF array-accessing servers with new HBAs and, for RoCE, DCB switches and dealing with end-to-end congestion management?

Pure Storage: Some customers are ready to adapt them, and some aren't. An array with NVMe internally can provide benefits transparently for those who aren't adapting for NVMeF. Folks in the know are already using RDMA (RoCE specifically), and seeing the dual benefits of performance (lower latency, higher throughput) and CPU offload (RDMA offloads the equivalent of ~20 cores at 50Gbps versus TCP).

DCB and PFC are standard in modern switches, so the Ethernet is "just there". Advanced end-to-end congestion management is interesting for optimising network utilisation and fairness, but it's icing on the cake. Those schemes must either be agreed between the storage vendor and the customer, or a de-facto standard method must emerge.

El Reg: Do they need routability with RoCE?

Pure Storage: Most folks are looking for NVMeF as a top-of-rack solution, where L2 forwarding is acceptable. RoCEv2 is available and is a good starting point, but routing L3 has been a challenge for other protocols and will take work.

El Reg: Could we cache inside the existing array controllers to augment existing RAM buffers and so drive up array performance?

Pure Storage: Of course. At the same time, DRAM is expensive, so what to cache and how much cache to provide is an interesting optimisation problem. Writes should obviously be committed to a stable, non-volatile module. For example, our NVRAMs are external to the controllers, dual-ported NVMe with DRAM backed by flash. For reads, a balanced approach between performance and economics is to cache metadata aggressively and bulk data opportunistically.

El Reg: With flash DIMMs say? Or XPoint DIMMs in the future?

Pure Storage: These technologies can provide some benefits, but mostly for fast power-on time (warm cache). Given that dual controllers are a requirement for HA, those benefits are mostly wasted on fast boot – adding cost, complexity, and new failure modes during all of runtime. XPoint will be another quantum leap in performance - let's talk about that when it's eventually available.

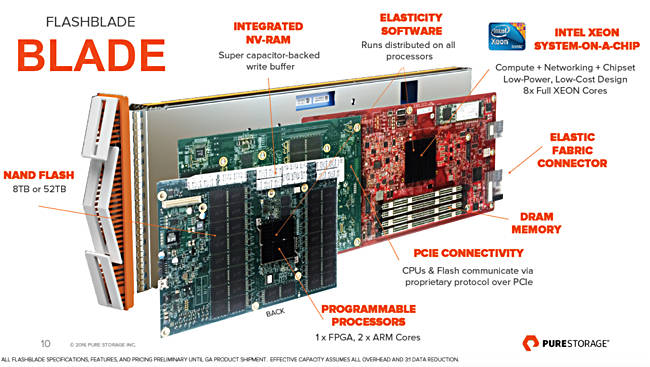

Pure Storage FlashBlade blade

El Reg: Does having an NVMe over fabrics connection to an array which is not using NVMe drives make sense?

Pure Storage: Sure. Just as NVMe in the back-end brings benefits without NVMeF, you can use the front-end alone. There is a benefit from eliminating two SCSI stacks (storage system's and the server's) as well as the benefits of RDMA. Obviously you don't have end-to-end NVMe, but you get to take advantage of the networking NVMeF programming model and some (not full) performance gain from NVMe.

El Reg: When will NVMeF arrays filled with NVMe drives and offering enterprise data services be ready? What is necessary for them to be ready?

Pure Storage: We expect that NVMe arrays with internal NVMe and offering enterprise data services will be ready by end of 2017 (or sooner). In fact, Pure has announced that upgrades to NVMe-enabled controllers are planned to be generally available prior to 31 December, 2017.

Timing for NVMeF arrays with end-to-end NVMe (from server to storage and within the storage system) and offering enterprise data services is likely to be further out in time, given the additional dependency on the ecosystem.

Given the pending arrival of NVMe, anyone buying a storage array today should be seeking to ensure that the storage array is future-proofed for NVMe, in order to protect their investment.

+Comment

In Pure's view customers can take advantage of NVMe in two ways: by leveraging NVMe internally within the storage system and/or leverage NVMe over Fabrics (NVMeF) for server-to-storage connection. Both approaches will materialise independently, and will offer the benefit of increased performance, density, and consolidation.

Pure is an NVMe evangelist. It says that anyone wishing to learn more about NVMe, and the firm's own FlashArray//m, naturally, can register at this website to view its "Outside The Box" episode 2 on December 6.

From our point of view, Pure appears to wish to adopt and drive the NVMe approach forward as fast as it can, and harder than other mainstream AFA vendors. Its point about the benefits of using NVMe fabrics access to a non-NVMe drive-using array is not one we've seen made by any other supplier.

Its upgrade strategy seems sound as customers shouldn't stop buying the current FlashArrays in anticipation of getting faster NVMe-using FlashArrays. They'll get them anyway. HPE is adopting a similar stance and we can see it becoming widespread. NVMe drive and fabric adoption could become the main SAN talking point in 2017. ®