
New science: Pathetic humans can't bring themselves to fire lovable klutz-bots

All is forgiven, you gaffe-prone cyber-cooks. You're one of us

A university study has found that adding basic facial expressions to a robot can be enough to forge an emotional bond with humans.

Researchers with University College London and the University of Bristol in the UK found that when humans were paired with a robot that displayed facial expressions of remorse, the fleshy overlord was both more patient and more forgiving, and in some cases even showed concern for the robot's feelings.

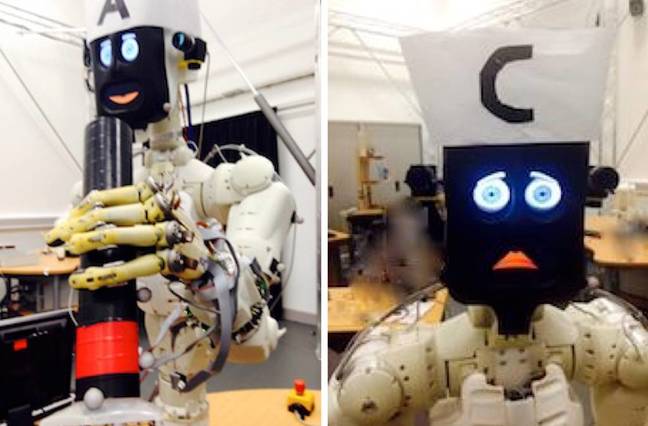

The study [PDF] partnered the human subjects with a trio of robots, two of which were non-communicative and one of which could talk and pull facial expressions.

Each human-robot pair was then given a series of basic kitchen tasks in which the robot acted as the "assistant" to the human. The droids were also set up with varying levels of efficiency: the talking robot was programmed to make mistakes such as dropping eggs.

The researchers found that the humans not only preferred the talkative robot but were also more willing to forgive its mistakes, siding with the chatty, clumsy bot over the more efficient, non-expressive models.

"Our results suggest that users are likely to prefer an expressive and personable robot, even if it is less efficient and more error prone, than a non-communicative one," the researchers concluded.

"It suggests that a robot effectively demonstrating apparent emotions, such as regret and enthusiasm, and awareness of its error, influences the user experience in such a way that dissatisfaction with its erroneous behavior is significantly tempered, if not forgiven, with a corresponding effect on trust."

Additionally, the researchers found that the bond the humans formed with the robot was strong enough that some subjects expressed concern for the unit's feelings. After the subjects had worked with the intentionally mistake-prone unit, the expressive robot asked them whether they would hire it as a kitchen helper.

The researchers said that a number of the subjects became "visibly uncomfortable" when asked the question, with one even reporting that, after answering "no", they thought the robot's face was "very sad", even though the unit was not programmed to show any response to the question.

The study suggests that when building robots to operate alongside humans in work environments, adding basic expressions and communication skills goes a long way toward helping humans feel more comfortable, even when that personability comes at the cost of efficiency.

Basically, if you're a cheery but ultimately useless drone, you're hired, and if you're a stone-cold reliable pair of hands, no one likes you. Robots aren't going to kill us all – no, instead, we're going to drag 'em down into the pits of mediocrity. ®