
My self-driving cars may lead to human driver ban, says Tesla's Musk

Nvidia touts $10,000 DIY robo-car brains, neural-net motorists in the cloud

GTC 2015 Self-driving cars are "almost a solved problem," Tesla Motors boss Elon Musk told the crowds at Nvidia's GPU Technology Conference in San Jose, California.

But he fears the on-board computers may be too good, and ultimately encourage laws that force people to give up their steering wheels. He added: "We’ll take autonomous cars for granted in quite a short time."

"We know what to do, and we’ll be there in a few years," the billionaire SpaceX baron said.

Musk is no stranger to robo-rides: the Tesla Model S 85D and 60D have an autonomous driving system that can park the all-electric cars. Now he thinks Tesla will beat Google at its own game and conquer the computer-controlled driving market, even though he's wary of a world run by "big" artificial intelligence: "Tesla is the leader in electric cars, but also will be the leader in autonomous cars, at least autonomous cars that people can buy."

Although Musk said on stage at the conference on Tuesday that human driving could be ruled illegal at some point, he later clarified on Twitter: "When self-driving cars become safer than human-driven cars, the public may outlaw the latter. Hopefully not."

It's no coincidence Musk was making noises about AI-powered cars at the Nvidia event: the GPU giant is going nuts for deep-learning AI, and just officially unveiled its Titan X graphics card, which it hopes engineers and scientists will use for machine learning.

And if you want to be like Musk, and develop your own computer-controlled car, Nvidia showed off its Drive PX board that will sell for $10,000 in May. The idea here is to have a fleet of cars, each with a mix of 12 cameras and radar or lidar sensors, driven around by humans, with the data they record uploaded to a specialized cloud service.

This data can be used to train a neural network to understand life on the road, and how to react to situations, objects and people, from the way humans behave while driving around. The Drive PX features two beefy Nvidia Tegra X1 processors, aimed at self-aware cars.

Each X1 contains four high-performance 64-bit ARM Cortex-A57 compute cores, and four power-efficient Cortex-A53s, lashed together using Nvidia's own interconnect, and 256 Maxwell GPU cores.

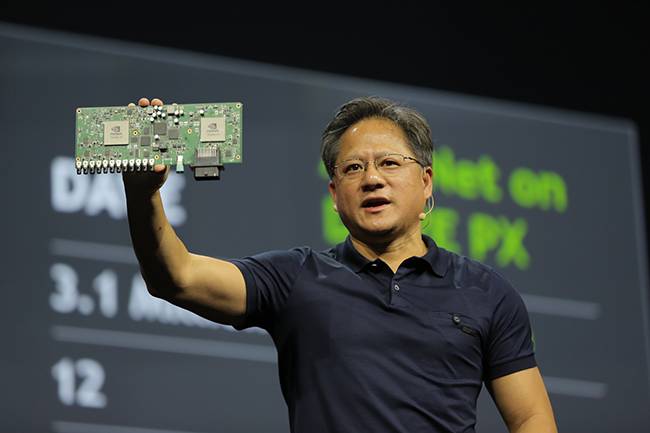

Car brain ... Jen-Hsun Huang, Nvidia CEO, holds up the Drive PX circuit board

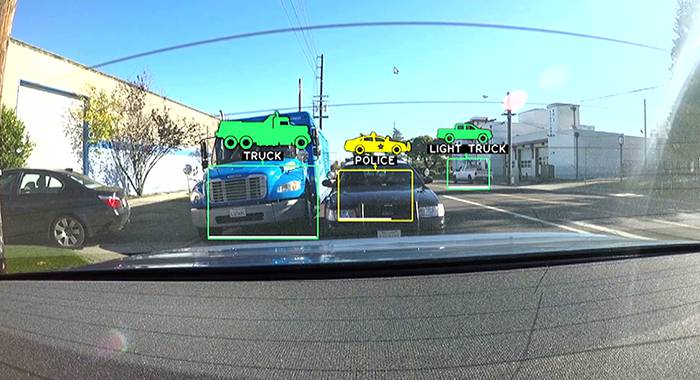

Nvidia engineers have already apparently captured at least 40 hours of video, and used the Amazon Mechanical Turk people-for-hire service to get other humans to classify 68,000 objects in the footage. This information – the classification and what they mean in the context of driving – is fed into the neural network. Now the prototype-grade software can pick out signs in the rain, know to avoid cyclists, and so on.

Nvidia's self-driving car software ... picking out what's on the road

This trained neural network is transferred to the Tegra X1 computers in each computer-controlled car, so they can perform immediate image recognition from live footage when on the road.

The car computers can't learn as they go while roaming the streets. Deep-learning algorithms take days or weeks to process information and build networks of millions of neuron-ish connections to turn pixels into knowledge – whereas car computers need to make decisions in fractions of a second. This is why the training has to be done offline.

It's like a human learning how to drive: spend a while being taught how to master the vehicle, and then apply that experience on the road.

The Drive PX-controlled cars can also upload live images and other sensor data to the cloud for further processing if a situation confuses them, or they aren't sure they handled it right – allowing the fleet to improve its knowledge from experience even after the bulk of the neural net training has been completed.
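
For the curious, the split between offline training and on-road recognition can be sketched in a few lines of Python. This is only an illustration of the idea, not Nvidia's software: the `OnboardClassifier` class, the `fake_model` stand-in for a trained network, and the 0.8 confidence threshold are all hypothetical. The model is frozen once loaded into the car, and any frame it is unsure about is queued for upload so it can feed a later round of training in the cloud.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical cut-off: if the top class scores below this, the frame is "confusing".
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Frame:
    frame_id: int
    pixels: bytes  # stand-in for real camera data


@dataclass
class OnboardClassifier:
    # Frozen model trained offline: maps a frame to class probabilities.
    predict: Callable[[Frame], Dict[str, float]]
    # Frames held back for upload and later retraining in the cloud.
    upload_queue: List[Frame] = field(default_factory=list)

    def classify(self, frame: Frame) -> str:
        probs = self.predict(frame)
        label = max(probs, key=probs.get)
        # No learning happens here; low-confidence frames are only queued.
        if probs[label] < CONFIDENCE_THRESHOLD:
            self.upload_queue.append(frame)
        return label


def fake_model(frame: Frame) -> Dict[str, float]:
    # Toy stand-in for a trained network: even-numbered frames are "easy".
    if frame.frame_id % 2 == 0:
        return {"cyclist": 0.95, "sign": 0.05}
    return {"cyclist": 0.55, "sign": 0.45}


car = OnboardClassifier(predict=fake_model)
labels = [car.classify(Frame(i, b"")) for i in range(4)]
queued_ids = [f.frame_id for f in car.upload_queue]
print(labels, queued_ids)
```

In this toy run the odd-numbered frames fall below the threshold and end up in the upload queue, while the car still gets an immediate answer for every frame.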

And, of course, Nvidia would love you to use its GPUs and Digits learning software in backend servers. It's already touting the Digits Devbox: that's a $15,000 GNU/Linux-powered machine for programmers that has four Titan X cards, and will go on sale in May.

"An entire fleet of vehicles can upload data to the cloud for further training via new data sets," Danny Shapiro, Nvidia's senior director of automotive, told The Register during a press Q&A at the GPU Tech Conference. "We’re bringing deep-learning into autonomous driving. We can have the car understand all the subtle nuances to mirror human drivers.

"Drive PX has not been deployed yet; it's going out to developers in May. Many people are waiting to receive it. Audi and Google are already using Nvidia technology, though, in autonomous car tests.

"There's a huge amount of cooperation between us and our automotive partners, but not between the automakers: each has their own goals and products and research. There is more and more focus on the computational horsepower that’s needed here: automakers are coming to us to deliver a mobile supercomputer."

He added that people with self-driving cars may have to apply for special driving licenses to allow their computer-controlled cars on the road: "Audi is working with the California DMV [Department of Motor Vehicles] to work out autonomous driving license ... We’ll see more regulation in that area."

We'll have more coverage on Nvidia's deep-learning and motors as this week's GPU conference progresses. ®